Recently, I wrote about merger identification using photometric redshifts and how close pairs are a good indicator of the rate at which mergers between galaxies occur at any given epoch of the Universe (see the article here).

I also discussed that there are more ways to identify ongoing mergers, most notably from the fraction of galaxies that look “perturbed”, i.e. gravitationally disturbed by one of their neighbors, or just past the point where they swallowed a neighboring galaxy.

This was the point of the paper I will be writing about today:

CEERS Key Paper. IX. Identifying Galaxy Mergers in CEERS NIRCam Images Using Random Forests and Convolutional Neural Networks

As you can see from the title, this is a machine learning paper. We also did this the old-fashioned way: asking the whole team to *look* at images and classify them. You have to get your training, testing and validation sets somehow. The neat thing with this paper is that we used simulated galaxies as the training sample, so we know the truth of whether they will merge or not.

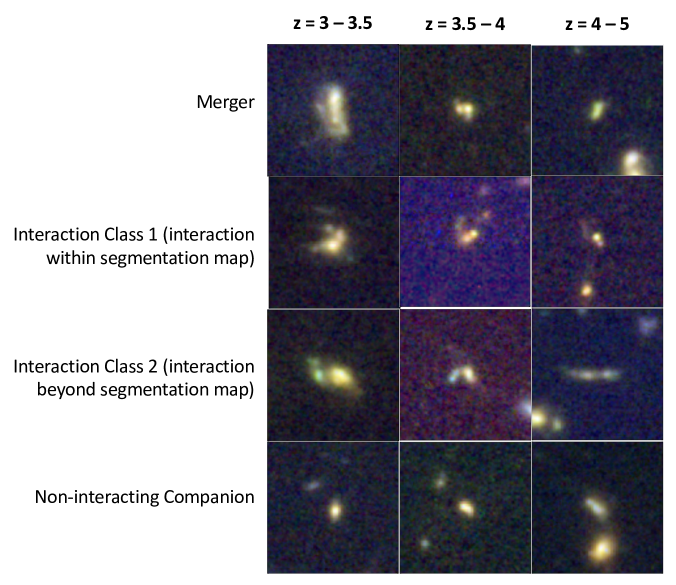

These are the kinds of interactions we were looking for: an active merger with two galaxies coalescing into one object, visibly still separate but connected objects, two separate objects that have some dark sky between them, and a non-interacting companion (future merger? maybe).

The other neat thing about this paper is that we tried several different techniques, including classical “morphometrics” (the shape and appearance of galaxies cast into a series of numbers). I’ve been trying to get everyone to call them “morphometrics” since I think it’s a cool word, but at this point I am trying to make “fetch” happen.

Back to the paper: we tried

- morphometrics

- a random forest classifier (classic Machine Learning)

- a convolutional neural network

This is mostly a machine learning paper so I will go with those first:

Random Forest

This is where you combine a series of “decision trees”: basically a series of yes/no questions that ends in a branch (or leaf) of the tree corresponding to a certain class. Train on your training set, identify which leaves hold merging galaxies, and voilà, a classification. Don’t like a single tree? Use a bunch of them (computationally cheap) and then average them out: your random forest. This is the algorithm that you apply first. If it doesn’t do at least a so-so job, rethink your input data.
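To make the “bunch of trees, then vote” idea concrete, here is a minimal sketch in plain Python. It is not the classifier from the paper: each tree is cut down to a single yes/no question (a “stump”), the two input features are made-up numbers standing in for morphology measurements, and the training sample is randomly generated toy data.

```python
import random

def fit_stump(rows, labels, feature):
    """Fit a one-question tree: 'is this feature above some threshold?'"""
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        for above in (0, 1):  # class assigned to rows above the threshold
            errors = sum(
                (above if row[feature] > threshold else 1 - above) != label
                for row, label in zip(rows, labels)
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, above)
    return feature, best[1], best[2]

def predict_stump(stump, row):
    feature, threshold, above = stump
    return above if row[feature] > threshold else 1 - above

def fit_forest(rows, labels, n_trees=25, seed=0):
    """Train each stump on a bootstrap resample and one random feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        picks = [rng.randrange(len(rows)) for _ in rows]  # with replacement
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump([rows[i] for i in picks],
                                [labels[i] for i in picks], feature))
    return forest

def predict_forest(forest, row):
    """Majority vote over all trees: 1 = merger, 0 = non-merger."""
    votes = sum(predict_stump(stump, row) for stump in forest)
    return 1 if 2 * votes > len(forest) else 0

# Toy training set (assumption: invented numbers, not CEERS or simulation data).
rng = random.Random(42)
rows, labels = [], []
for _ in range(100):
    rows.append([rng.gauss(0.6, 0.1), rng.gauss(0.7, 0.1)])  # "mergers"
    labels.append(1)
    rows.append([rng.gauss(0.2, 0.1), rng.gauss(0.3, 0.1)])  # "non-mergers"
    labels.append(0)

forest = fit_forest(rows, labels)
print(predict_forest(forest, [0.8, 0.9]), predict_forest(forest, [0.0, 0.1]))
```

A real random forest grows deeper trees and considers a random subset of features at every split, but the bootstrap-then-vote structure is the same.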

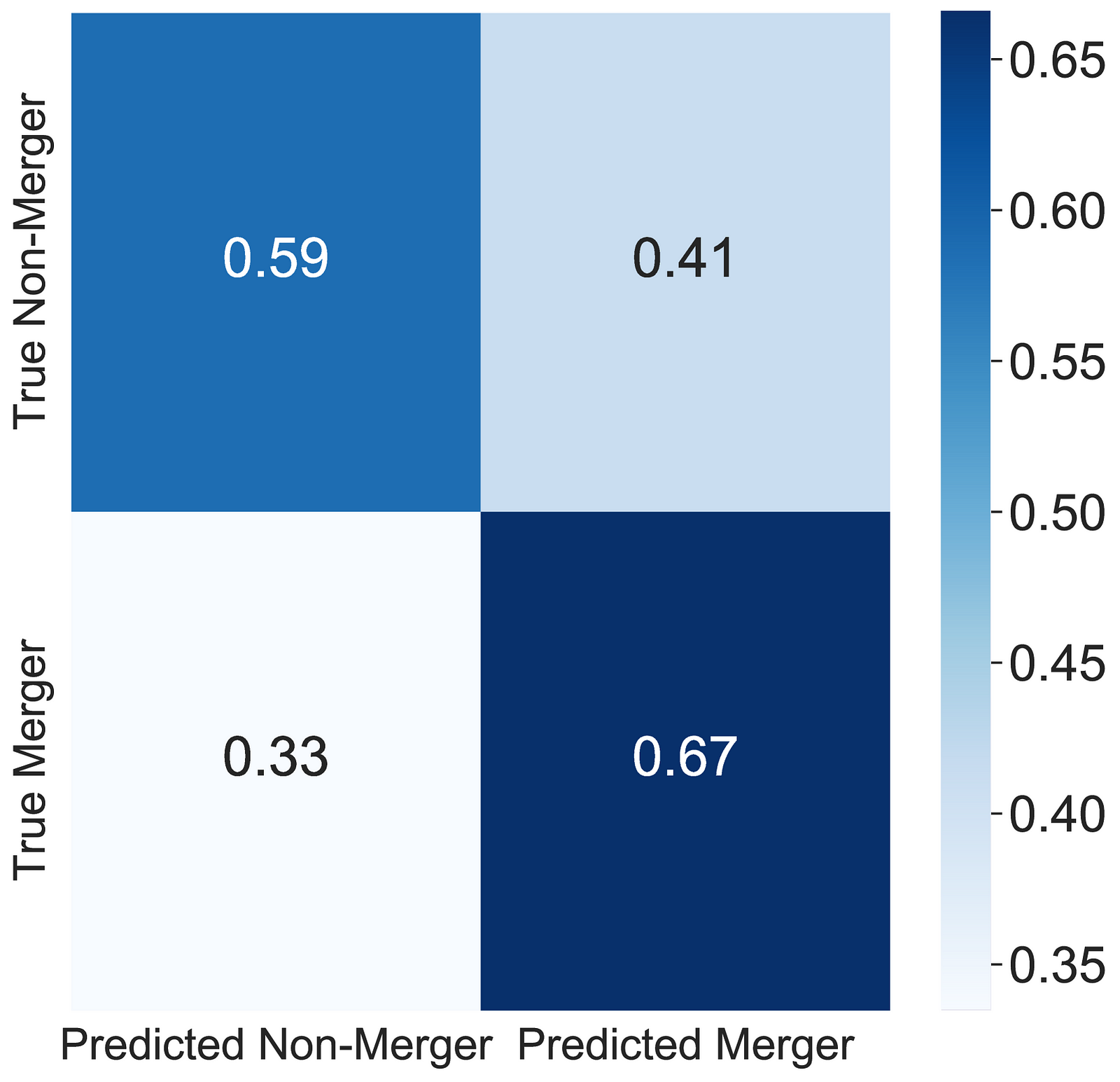

So how did it do?

Not too bad, but not really time to stop either. This is better called “proficient”. You wouldn’t trust a spellchecker that got it wrong 33% of the time, right? We can do better. But it is a fair bit better than a random guess.

DeepMerge CNN

In a convolutional neural network, you can use the cutouts from the JWST NIRCam images directly as input. These are then convolved (run through a series of filters, like edge detection) and then weighted in a few layers of neurons to produce a merger yes/no answer. Again: train on the training set, test and validate.

As the confusion matrix above shows, the performance is remarkably similar to the Random Forest. Huh!
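For a feel of what “convolved and then weighted” means, here is a toy sketch in plain Python. It says nothing about the actual DeepMerge architecture: the 5x5 cutout, the hand-written Sobel-like edge filter, and the neuron weights and bias are all made up for illustration, whereas a real CNN learns its filters and weights from the training set.

```python
import math

def convolve2d(image, kernel):
    """Slide the kernel over the image ('valid' mode, no flipping, as CNN layers do)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Keep positive filter responses, zero out the rest."""
    return [[max(0.0, value) for value in row] for row in feature_map]

def neuron(inputs, weights, bias):
    """One output neuron: weighted sum squashed to a 0..1 score."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Toy cutout: dark sky on the left, a bright source on the right.
cutout = [[0, 0, 1, 1, 1] for _ in range(5)]

# Sobel-like filter that responds where the image brightens from left to right.
sobel_x = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

edges = relu(convolve2d(cutout, sobel_x))

# Flatten the feature map and feed it to one neuron with invented weights.
flat = [value for row in edges for value in row]
score = neuron(flat, weights=[0.1] * len(flat), bias=-1.0)
print(edges, score)
```

The filter lights up along the edge of the source and stays at zero inside it; a trained network stacks many such filters and layers before the final yes/no neuron.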
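If you have not met a confusion matrix before: it is just a tally of how often the classifier’s answer matches the truth, split by class. A minimal sketch, with invented labels rather than anything from the paper:

```python
def confusion_matrix(truth, predicted):
    """Tally a binary classifier's answers, with 1 = merger and 0 = non-merger."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for t, p in zip(truth, predicted):
        if p == 1:
            counts["TP" if t == 1 else "FP"] += 1
        else:
            counts["FN" if t == 1 else "TN"] += 1
    return counts

def accuracy(counts):
    """Fraction of all objects that were classified correctly."""
    return (counts["TP"] + counts["TN"]) / sum(counts.values())

# Made-up example: six galaxies, three true mergers and three non-mergers.
truth = [1, 1, 1, 0, 0, 0]
predicted = [1, 1, 0, 0, 0, 1]

counts = confusion_matrix(truth, predicted)
print(counts, accuracy(counts))
```

Here one merger is missed and one non-merger is flagged by mistake, so four out of six answers are right. For a two-class problem with balanced classes, a random guess would get about half of them right.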

Morphometrics

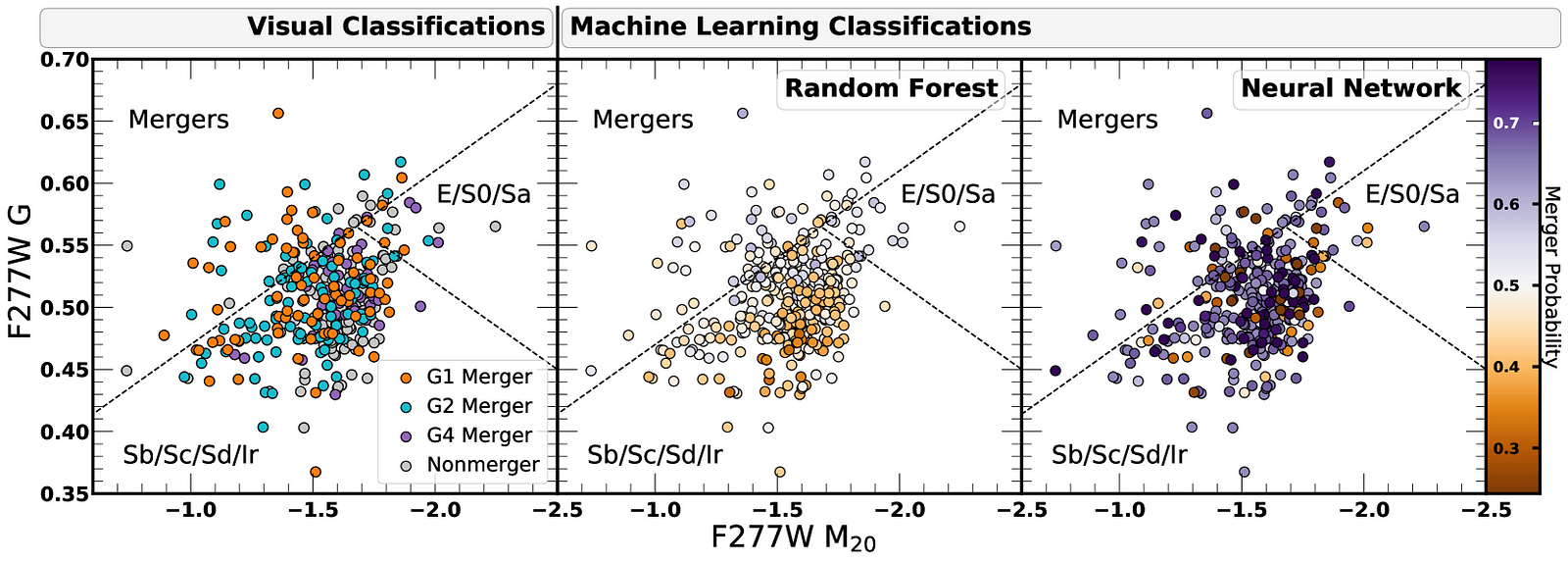

So this is a Gini-M20 based classification: a galaxy that falls in a particular part of this parameter space is likely a merger.
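For those who want to see what goes into those two numbers, here is a rough sketch of how I understand the standard definitions: Gini measures how unequally the flux is spread over the pixels, and M20 is the log of the second-order moment of the brightest pixels holding 20% of the flux, relative to the total. The toy images are invented, the centre is simply taken to be the flux-weighted centroid, and there is no background subtraction or segmentation map, so treat this as an illustration and not the measurement code used in the paper.

```python
import math

def gini(fluxes):
    """Gini coefficient: 0 = flux spread evenly, 1 = all flux in one pixel."""
    values = sorted(abs(f) for f in fluxes)
    n = len(values)
    mean = sum(values) / n
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))

def m20(pixels):
    """M20 for a list of (x, y, flux) pixels, centred on the flux centroid."""
    total_flux = sum(f for _, _, f in pixels)
    xc = sum(x * f for x, _, f in pixels) / total_flux
    yc = sum(y * f for _, y, f in pixels) / total_flux

    def moment(x, y, f):
        return f * ((x - xc) ** 2 + (y - yc) ** 2)

    m_total = sum(moment(x, y, f) for x, y, f in pixels)
    m_bright, cumulative = 0.0, 0.0
    # Add pixels from brightest to faintest until 20% of the flux is reached.
    for x, y, f in sorted(pixels, key=lambda p: p[2], reverse=True):
        if cumulative >= 0.2 * total_flux:
            break
        cumulative += f
        m_bright += moment(x, y, f)
    return math.log10(m_bright / m_total)

def toy_image(flux_at):
    """5x5 image as (x, y, flux) tuples; flux_at maps (x, y) to a flux."""
    return [(x, y, flux_at(x, y)) for y in range(5) for x in range(5)]

# One centrally concentrated source versus two well-separated bright nuclei.
single = toy_image(lambda x, y: {0: 10, 1: 4}.get(max(abs(x - 2), abs(y - 2)), 1))
double = toy_image(lambda x, y: 10 if (x, y) in {(0, 2), (4, 2)} else 1)

for name, image in (("single", single), ("double", double)):
    print(name, gini([f for _, _, f in image]), m20(image))
```

The double-nucleus image comes out with a higher (less negative) M20 than the single source because its brightest pixels sit far from the centre, which is the kind of signature a cut in the Gini-M20 plane is looking for.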

Below are all three classifications (visual, RF and CNN) on the morphometric plane.

What we are getting from this paper is that the techniques differ drastically in what they consider a merger. We had a very similar experience using morphometrics alone but with different surveys or morphometric systems (e.g. CAS vs Gini-M20). And that is even with training based on simulations, where we know whether a pair is going to merge in the next 250 million years (we can just run the clock forward in the computer). Classification like this is hard.

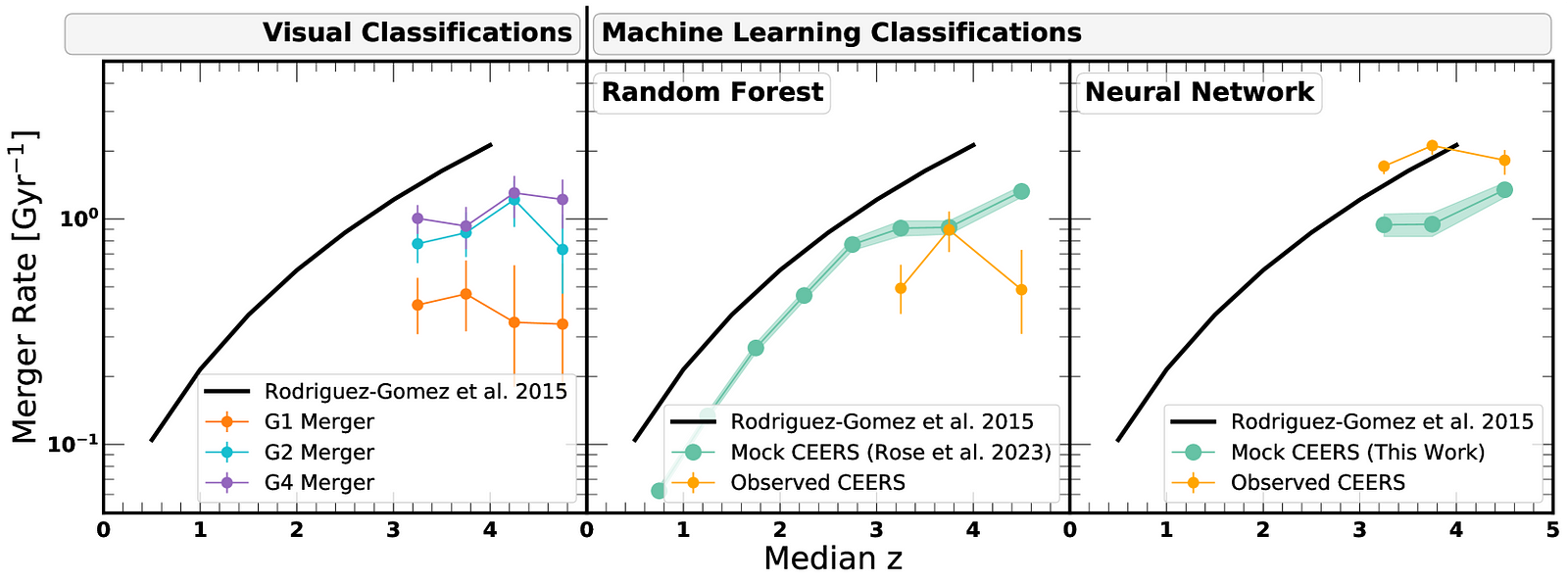

But the good news is that the merger rate inferred at different redshifts is fairly robust and agrees between the techniques.

We are still a ways away from being able to classify individual galaxies on whether or not they’ll merge (“this one, definitely in the next Gyr”), but the fraction and the rate at which the population is merging seem pretty robust. I expect that a lot more work will go into the morphology of merging galaxies in the near future, with more JWST data on the way and the Euclid Space Observatory now also in full science mode. More exciting machine learning applications are yet to come!
